Calculating Inter-Rater Reliability

Inter-Rater Reliability. Reliability is the consistency or repeatability of your measures (William M.K. Trochim, Reliability) and, from a methodological perspective, is ...

Evaluating inter-rater reliability involves having multiple raters assess the same set of items and then comparing the ratings for each item.
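As a minimal sketch of that per-item comparison, assuming ratings are stored as a mapping from each item to the list of labels its raters assigned. The item names, labels, and the helper `items_with_full_agreement` are hypothetical and only serve the illustration:

```python
def items_with_full_agreement(ratings):
    """Return, for each item, whether every rater gave the same label."""
    return {item: len(set(labels)) == 1 for item, labels in ratings.items()}


# Hypothetical data: three raters label the same four items.
ratings = {
    "item_1": ["A", "A", "A"],
    "item_2": ["A", "B", "A"],
    "item_3": ["B", "B", "B"],
    "item_4": ["C", "C", "B"],
}

agreement_by_item = items_with_full_agreement(ratings)

# Share of items on which all raters agree.
share_unanimous = sum(agreement_by_item.values()) / len(agreement_by_item)

print(agreement_by_item)
print(share_unanimous)
```

This only flags unanimous items; it does not correct for agreement that would occur by chance.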

The method for calculating inter-rater reliability depends on the type of data (categorical, ordinal, or continuous) and the number of coders. Computing Inter-Rater Reliability for Observational Data. For inter-rater reliability, I want to find the sample size for the following problem.
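The dependence on data type and number of coders can be sketched as a small lookup. The mapping below reflects commonly recommended statistics, not a rule stated on this page, and the function name `suggest_statistic` is hypothetical:

```python
def suggest_statistic(data_type, n_coders):
    """Suggest a commonly used agreement statistic (a convention, not a rule)."""
    if n_coders < 2:
        raise ValueError("inter-rater reliability needs at least two coders")
    if data_type == "categorical":
        # Nominal labels: kappa-type statistics correct for chance agreement.
        return "Cohen's kappa" if n_coders == 2 else "Fleiss' kappa"
    if data_type == "ordinal":
        # Ordered labels: near misses can be penalized less than distant ones.
        if n_coders == 2:
            return "weighted Cohen's kappa"
        return "intraclass correlation (ICC)"
    if data_type == "continuous":
        return "intraclass correlation (ICC)"
    raise ValueError(f"unknown data type: {data_type!r}")


print(suggest_statistic("categorical", 2))
print(suggest_statistic("categorical", 5))
print(suggest_statistic("continuous", 3))
```

Other choices, such as Krippendorff's alpha, are also in common use; the sketch only shows how the two factors narrow the options.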

In this simple-to-use calculator, you enter the frequency of agreements. In inter-rater reliability calculations from the title and abstract screening stage, "maybe" votes are counted as "yes" votes. Although the test-retest design is not used to determine inter-rater reliability, there are several methods for calculating it.
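A minimal sketch of how counting "maybe" votes as "yes" votes changes the agreement count, assuming votes are stored as plain strings. The vote lists and helper names are hypothetical and do not come from any screening tool:

```python
def recode_maybe_as_yes(votes):
    """Treat every 'maybe' vote as a 'yes' vote; other votes are unchanged."""
    return ["yes" if vote == "maybe" else vote for vote in votes]


def count_agreements(votes_a, votes_b):
    """Count the items on which two reviewers cast the same vote."""
    if len(votes_a) != len(votes_b):
        raise ValueError("both reviewers must vote on the same items")
    return sum(a == b for a, b in zip(votes_a, votes_b))


# Hypothetical screening votes from two reviewers on four records.
reviewer_a = ["yes", "maybe", "no", "maybe"]
reviewer_b = ["yes", "yes", "no", "no"]

raw_agreements = count_agreements(reviewer_a, reviewer_b)
recoded_agreements = count_agreements(
    recode_maybe_as_yes(reviewer_a), recode_maybe_as_yes(reviewer_b)
)

print(raw_agreements)      # agreements before recoding
print(recoded_agreements)  # agreements after 'maybe' is counted as 'yes'
```

In this example the recoding turns one disagreement ("maybe" against "yes") into an agreement, so the recoding rule needs to be fixed before any reliability statistic is computed.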

$$\mathrm{IRR} = \frac{TA}{TR \times R} \times 100$$

We suggest an alternative method for estimating intra-rater reliability in the framework of classical test theory, using the dis-attenuation formula for inter-test correlations. Real Statistics Data Analysis Tool.

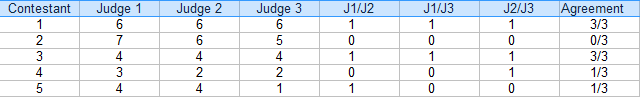

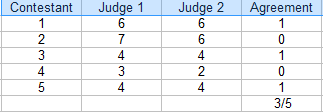

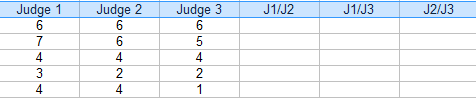

How do you calculate inter-rater reliability for multiple items? Are the ratings a match, or merely similar? The following formula is used to calculate the inter-rater reliability between judges or raters.

In statistics, inter-rater reliability is also called by various similar names, such as inter-rater agreement, inter-rater concordance, inter-observer reliability, and inter-coder reliability.

Computing Inter-Rater Reliability for Observational Data: An Overview and Tutorial. Kevin A. Hallgren, University of New Mexico. Many research designs require the assessment of inter-rater reliability.

Sample-size problem: number of raters, 3; number of variables each rater is evaluating, 39; confidence level, 95%.

Inter-Rater Reliability Formula. Use the free Cohen's kappa calculator. The Real Statistics Resource Pack provides the Interrater Reliability data analysis tool, which can be used to calculate Cohen's kappa as well as other measures. Please note that only the final set of ...
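Cohen's kappa, the statistic these calculators report, compares the observed agreement between two raters with the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The following is a from-scratch sketch, not the implementation of any of the tools named above. The function name and the 2x2 example counts are hypothetical:

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters who labeled the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(ratings_a)
    # Observed agreement: share of items with identical labels.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement: product of the raters' marginal proportions per label.
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical counts: 20 yes/yes, 5 yes/no, 10 no/yes, 15 no/no.
pairs = (
    [("yes", "yes")] * 20
    + [("yes", "no")] * 5
    + [("no", "yes")] * 10
    + [("no", "no")] * 15
)
rater_a = [a for a, _ in pairs]
rater_b = [b for _, b in pairs]

kappa = cohens_kappa(rater_a, rater_b)
print(round(kappa, 3))
```

Here the observed agreement is 35/50 = 0.7 and the chance agreement is 0.5, so kappa is 0.4, noticeably lower than the raw 70% agreement.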

$$\text{reliability} = \frac{\text{number of agreements}}{\text{number of agreements} + \text{number of disagreements}}$$

This calculation is but one method to measure consistency between coders. The Statistics Solutions Kappa Calculator assesses the inter-rater reliability of two raters on a target. With this tool you can easily calculate the degree of agreement between two judges during the selection of studies for inclusion.
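The agreement ratio above can be computed directly from the two counts. A minimal sketch, with a hypothetical function name and example counts:

```python
def percent_agreement(agreements, disagreements):
    """Agreements divided by the total number of rated items."""
    total = agreements + disagreements
    if total == 0:
        raise ValueError("at least one rated item is required")
    return agreements / total


# Hypothetical example: two coders agree on 8 of 10 items.
reliability = percent_agreement(8, 2)
print(reliability)
```

Because this ratio does not account for chance agreement, it is usually reported alongside a chance-corrected statistic such as Cohen's kappa.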

Interrater reliability measures the agreement between two or more raters. I have responses rated on 12 binary categories, treating the categories as separate items on the same measure. To assess the reliability for 8 raters on 50 cases across 10 variables being rated, you'd have 10 datasets, each containing 8 columns and 50 rows (400 data points per dataset, 4,000 total points).
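The layout described above (one dataset per variable, one row per case, one column per rater) can be sketched as follows. The ratings are random placeholders, and the variable names are hypothetical:

```python
import random

N_RATERS, N_CASES, N_VARIABLES = 8, 50, 10

rng = random.Random(0)  # fixed seed so the placeholder data is reproducible

# One dataset per rated variable; each dataset has 50 rows (cases)
# and 8 columns (raters). Ratings are placeholder 0/1 values.
datasets = {
    f"variable_{v}": [
        [rng.randint(0, 1) for _ in range(N_RATERS)] for _ in range(N_CASES)
    ]
    for v in range(1, N_VARIABLES + 1)
}

points_per_dataset = sum(len(row) for row in datasets["variable_1"])
total_points = sum(len(row) for table in datasets.values() for row in table)

print(len(datasets))       # number of datasets
print(points_per_dataset)  # data points in one dataset
print(total_points)        # data points across all datasets
```

A reliability statistic would then be computed once per dataset, giving one estimate per rated variable.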

Pdf Older Adults Knowledge Of Pressure Ulcer Prevention A Prospective Quasi Experimental Study Siobhan Murphy Academia Edu

Pdf Testing The Reliability Of Inter Rater Reliability

Inter Rater Reliability Youtube

Inter Rater Reliability Irr Definition Calculation Statistics How To

Pdf Changes In Mood Craving And Sleep During Acute Abstinence Reported By Male Cocaine Addicts Edward Cone Academia Edu

Sub Group Weighted Inter Rater Reliability Analysis For 2 Raters Youtube

Inter Rater Reliability Using Spss Youtube

Spss Tutorial Inter And Intra Rater Reliability Cohen S Kappa Icc Youtube

Sage Research Methods Best Practices In Quantitative Methods

Cervical Joint Position Error Test Physiopedia

Average Interrater Reliability Of Four Raters Of The Individual Download Table

Calculating Inter Rater Reliability Between 3 Raters Researchgate